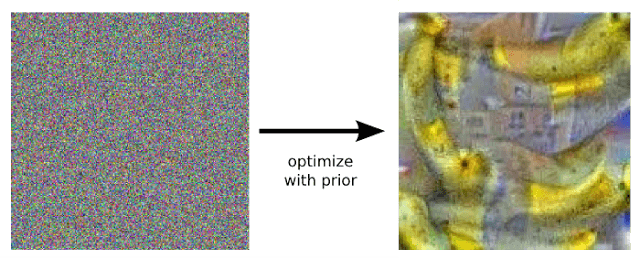

Google has published a very interesting post on its research blog about taking neural networks that were trained to recognize objects and having them paint those objects instead.

As the post explains:

> We train an artificial neural network by showing it millions of training examples and gradually adjusting the network parameters until it gives the classifications we want. The network typically consists of 10-30 stacked layers of artificial neurons. Each image is fed into the input layer, which then talks to the next layer, until eventually the “output” layer is reached. The network’s “answer” comes from this final output layer.
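
To make the "stacked layers" idea concrete, here is a minimal sketch of an image passing from the input layer through to the output layer. Everything in it is an assumption for illustration: the layer sizes, the random (untrained) weights, and the helper names `relu`, `softmax`, and `forward` are made up, and a real image network is far larger.

```python
import numpy as np

def relu(x):
    """Simple neuron activation: negative values become zero."""
    return np.maximum(0.0, x)

def softmax(z):
    """Turn raw output scores into probabilities that sum to 1."""
    e = np.exp(z - z.max())
    return e / e.sum()

def forward(x, layers):
    """Feed x into the first layer; each layer 'talks to' the next."""
    activation = x
    for weights, bias in layers[:-1]:
        activation = relu(weights @ activation + bias)
    # The final ("output") layer produces the network's answer.
    weights, bias = layers[-1]
    return softmax(weights @ activation + bias)

rng = np.random.default_rng(0)

# Hypothetical sizes: 64 input "pixels", two hidden layers, 3 classes.
sizes = [64, 32, 16, 3]
layers = [
    (rng.normal(0.0, 0.1, (n_out, n_in)), np.zeros(n_out))
    for n_in, n_out in zip(sizes[:-1], sizes[1:])
]

image = rng.random(64)           # stand-in for a flattened image
probs = forward(image, layers)   # one probability per class
answer = int(np.argmax(probs))   # index of the most likely class
print(probs, answer)
```

Training would mean nudging the numbers in `layers` until `answer` matches the label on each training example; that step is left out of this sketch.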

The researchers call this "inceptionism" and the results are more than a little bizarre. The following slides show the results of different neural networks "painting" the thing they were trained on, even when the source image is unrelated or is just random noise. The underlying mechanics are quite complex, but imagine you are seeing how a neural network "sees" the world.
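
One rough way to picture the "painting" step: instead of adjusting the network to fit the image, keep the network fixed and adjust the image so that the network responds more strongly to one class. The toy sketch below does this with gradient ascent on a tiny two-layer network. It is an illustration under loud assumptions, not Google's implementation: the weights `W1` and `W2` are random stand-ins for a trained network, and `class_score`, `score_gradient`, and `dream` are hypothetical names.

```python
import numpy as np

rng = np.random.default_rng(0)

# Random stand-ins for the weights of an already trained network.
W1 = rng.normal(0.0, 0.1, (16, 64))   # 64 input "pixels" -> 16 hidden units
W2 = rng.normal(0.0, 0.5, (3, 16))    # 16 hidden units -> 3 class scores

def class_score(x, target):
    """How strongly the network responds to class `target` for input x."""
    hidden = np.tanh(W1 @ x)
    return (W2 @ hidden)[target]

def score_gradient(x, target):
    """Gradient of the class score with respect to the input pixels."""
    hidden = np.tanh(W1 @ x)
    # Chain rule: d(score)/d(hidden) = W2[target], d(tanh)/d(pre) = 1 - hidden**2
    return W1.T @ (W2[target] * (1.0 - hidden ** 2))

def dream(x, target, steps=50, step_size=0.05):
    """Repeatedly nudge the image toward whatever excites class `target`."""
    x = x.copy()
    for _ in range(steps):
        x += step_size * score_gradient(x, target)
        x = np.clip(x, 0.0, 1.0)   # keep pixel values in a valid range
    return x

noise = rng.random(64)             # start from random data
dreamed = dream(noise, target=2)

before = class_score(noise, 2)
after = class_score(dreamed, 2)
print(before, after)
```

The weights never change here; only the input does. With a real trained network and a real photo, the same kind of loop is what makes the network's favorite shapes start to appear in the picture.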

Skyarrow

This one is pretty simple: the network was asked to find every arrow.

Knight

Given a picture of a knight, this neural network finds what it was trained on all over the place: animals galore!

Animal Countryside

This looks like a landscape, but it is made of an insane array of animals and wildlife.

Dog Scream

Edvard Munch's iconic piece goes to the dogs. The eyes all over the place are more than a little unsettling.